Abstract

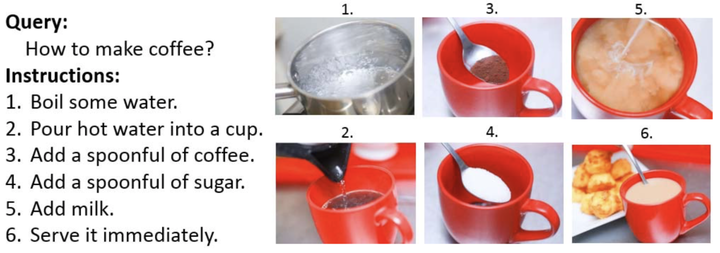

Text is the easiest means of recording information, but it is not always the best means of understanding a concept. Psychological theories argue that information presented visually is easier to understand. While techniques exist for generating text from a given image, the inverse problem, automatically fetching coherent images to represent a given set of instructions (a sequence of text), is a hard one. In this paper, we present a novel multistage framework that converts textual instructions into coherent visual descriptions (text instructions annotated with images). The key components of the proposed approach are: (i) a novel framework that combines text and image analysis to generate visual descriptions; and (ii) a method for ensuring coherence across visual descriptions, using a combination of deep learning and a graph-based approach. We demonstrate the effectiveness of the proposed approach through a user study on a dataset of instructions and corresponding images collected from the WikiHow website.