Abstract

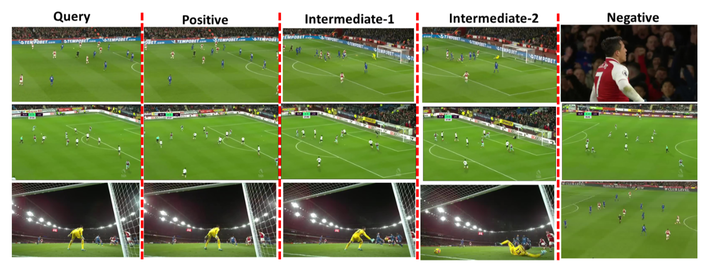

Critical events in a video are the sets of frames in which user attention is heightened. Such events are usually fine-grained activities and do not necessarily have well-defined shot boundaries. Traditional approaches to Shot Boundary Detection (SBD) perform frame-level classification to obtain shot boundaries and fail to identify the critical shots in a video. We cast the identification of critical frames and shot boundaries as the problem of learning a frame image similarity metric that models the distance relationships between different types of video frames. We propose a novel pentuplet loss to learn this metric through a pentuplet-based deep learning framework. We evaluate the proposed framework on soccer highlight videos, where it significantly outperforms state-of-the-art baselines on shot boundary detection. It also shows promising results on critical frame detection when compared against human annotations on soccer highlight videos.