Abstract

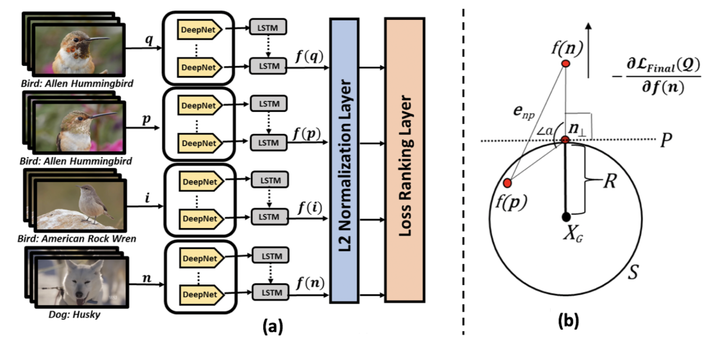

In this paper, we propose the Radial Loss, which uses category and sub-category labels to learn an order-preserving, fine-grained video similarity metric. We propose an end-to-end quadlet-based Convolutional Neural Network (CNN) combined with a Long Short-Term Memory (LSTM) unit that models video similarity by learning the pairwise distance relationships between the samples in a quadlet, where each quadlet is generated from the category and sub-category labels. We showcase two novel applications of the learned video similarity metric: (i) fine-grained video retrieval and (ii) fine-grained event detection with simultaneous shot boundary detection. On two new fine-grained video datasets, we show promising results compared with the baselines.
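To make the idea of an order-preserving quadlet objective concrete, here is a minimal sketch. It is not the paper's Radial Loss, whose exact form the abstract does not give. It assumes a quadlet made of an anchor, a sample from the same sub-category, a sample from the same category but a different sub-category, and a sample from a different category. It also assumes a plain hinge penalty with a hypothetical `margin` parameter. The function names and the toy 2-D embeddings are illustrative only; in practice the embeddings would come from the CNN-LSTM network.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def quadlet_ranking_loss(anchor, same_sub, same_cat, diff_cat, margin=1.0):
    """Hypothetical hinge loss that encourages the distance ordering
    d(anchor, same_sub) < d(anchor, same_cat) < d(anchor, diff_cat).

    This is an illustrative stand-in, not the Radial Loss from the paper.
    """
    d_sub = euclidean(anchor, same_sub)   # same sub-category
    d_cat = euclidean(anchor, same_cat)   # same category, other sub-category
    d_out = euclidean(anchor, diff_cat)   # different category

    # Penalize each adjacent pair of distances that violates the ordering
    # by less than the margin.
    inner = max(0.0, margin + d_sub - d_cat)
    outer = max(0.0, margin + d_cat - d_out)
    return inner + outer


# Toy 2-D embeddings (assumed values, for illustration only).
anchor = [0.0, 0.0]
well_ordered = quadlet_ranking_loss(anchor, [1.0, 0.0], [3.0, 0.0], [5.0, 0.0])
mis_ordered = quadlet_ranking_loss(anchor, [5.0, 0.0], [3.0, 0.0], [1.0, 0.0])
print(well_ordered, mis_ordered)
```

In this sketch, a quadlet whose distances already respect the label hierarchy with enough margin incurs no penalty, while a quadlet with the ordering reversed is penalized on both adjacent pairs.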

Publication

In ICASSP 2019 - 2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)